STUDY DESIGN CONSIDERATIONS

The study is an empirical, baseline investigation into formative uses of software systems to support the instruction of writing in a classroom setting. We are limiting the products under consideration to those that include automated feedback to teachers, students and others supporting the instruction of writing in a classroom setting. The study seeks to answer a central question:

Can software support the instruction of writing in a classroom setting?

Background

Providers of software designed to support the instruction of writing have been competing for space within schools for decades. For many, success has been measured in increments, such as the number of students or teachers using an application. This race to build traction within schools has led to a fragmented and largely misunderstood marketplace; there has been no comprehensive investigation into the potential of these systems, and most of the literature reads as promotional for some product or provider. The study is designed to offer a fair, impartial and balanced investigation into the current capabilities of selected systems, so that diverse groups of students, parents, administrators, and teachers can better understand where and how software can play a role in supporting the instruction of writing.

As schools shift to online assessment over the next few years, computers will play a larger role in both how students are tested and how they prepare for those tests. This presents a unique opportunity to inform school administrators, teachers and students of the potential to support new instructional demands. At a time when the use of computers in classrooms is scheduled to change dramatically, a rigorous and unbiased study of tools that are specifically designed to support those new demands will benefit both providers and consumers of those services.

Finally, the study is specifically focused on how software can support writing instruction. We know that students are writing less today. We also know that demands placed on teachers are a critical factor in addressing how much writing is assigned. Put simply, as classroom sizes are growing, the level of detailed feedback provided to each student/writer is falling. Software can play a vital role in addressing those workload constraints, and teachers need more information to understand its potential.

Conceptual Framework and Sampling Plan Identification

The study will yield multiple sources of evidence. Accordingly, every effort will be made to address the following: the selection of classrooms adhering to common practices of writing instruction for both intervention and control groups; the encouragement of engagement with the software in the service of writing instruction; the identification of meaningful outcome variables for the study; and the protection of human subjects.

Based on conceptualizations of validity, the design of the study is focused on the best ways, constrained by time and resources, to gather evidence for the selected products, including but not limited to the implications of product usage, generalizations that might be made from each product's unique capabilities, extrapolations from interpretations of data output, and theory-based interpretations of the findings.

Sources of Validity Evidence

The general model in Table 1 provides the study considerations to establish kinds of validity evidence and their sources:

Table 1: Sources of Validity Evidence

| Sources | Elements of Study Design |
| --- | --- |
| Construct modeling | Down-selection of vendors to validate study design requirements. Investigation of platform use data to validate alignment with variable considerations. |
| Response processes | Surveys and/or semi-structured interviews with administrators, teachers, students. |
| Scoring | Ability of products to score student writing samples in ways similar to humans. |
| Disaggregation | Descriptive reporting of product performance(s) and other measures of writing performance, with attention to reporting in terms of ESL, ELL, Special Education, gender and race/ethnicity, if necessary. |
| Extrapolation | Convergent and divergent sources of evidence based on performance and attitudinal variables. |
| Generalization | Relationship between construct coverage through performance and attitudinal variables and the target of the construct covered by the selected products. |
| Theory-based interpretation | Analysis of evidence based on concurrent research on similar products by the educational measurement and writing communities; interpretation of findings in light of that research. |
| Consequence | Impact of the system within the sampling plan. |
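The Scoring element in Table 1 concerns how closely product scores track human scores. The study does not name an agreement statistic; as one illustration, the sketch below computes quadratic weighted kappa, a measure often reported for human and machine essay scores. The function name, the 1-4 score scale, and the sample scores are assumptions made for illustration only.

```python
from collections import Counter


def quadratic_weighted_kappa(human, machine, min_score, max_score):
    """Agreement between two raters on an ordinal scale (1.0 = perfect)."""
    if len(human) != len(machine) or not human:
        raise ValueError("score lists must be non-empty and of equal length")
    k = max_score - min_score + 1  # number of score categories
    if k < 2:
        raise ValueError("the scale needs at least two score points")
    n = len(human)

    observed = Counter(zip(human, machine))  # joint counts of (human, machine)
    human_hist = Counter(human)              # marginal counts per rater
    machine_hist = Counter(machine)

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(min_score, max_score + 1):
        for j in range(min_score, max_score + 1):
            # Disagreements are penalized by the squared score distance.
            weight = (i - j) ** 2 / (k - 1) ** 2
            weighted_observed += weight * observed[(i, j)] / n
            weighted_expected += weight * human_hist[i] * machine_hist[j] / (n * n)

    if weighted_expected == 0:
        # Both raters gave every essay the same single score;
        # treating that as full agreement is a choice made in this sketch.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical scores on a 1-4 rubric for six essays.
human_scores = [1, 2, 3, 4, 3, 2]
machine_scores = [1, 2, 3, 4, 2, 2]
kappa = quadratic_weighted_kappa(human_scores, machine_scores, 1, 4)
print(f"quadratic weighted kappa: {kappa:.3f}")
```

Values near 1 indicate close agreement and values near 0 indicate agreement no better than chance. Any threshold for "similar to humans" would need to be set by the Working Group.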

Null Hypothesis Significance Testing (NHST)

The following statements were drafted with three intended study outcome determinants:

- Increase the quality and quantity of writing by students;

- Support teachers to meet identified performance goals for writing instruction;

- Match the needs of students and teachers within the constraints of typical school environments.

Stated as two-tailed hypotheses (itself a signal that the positive or negative direction of each hypothesis is unknown), the study aims may be expanded and expressed as follows:

- NHST Statement 1. Writing Quality
  - H0: Selected products will not increase the quality of writing by students at levels of significant difference.
  - HA: Selected products will increase the quality of writing by students at levels of significant difference.

- NHST Statement 2. Writing Quantity
  - H0: Selected products will not increase the quantity of writing by students at levels of significant difference.
  - HA: Selected products will increase the quantity of writing by students at levels of significant difference.

- NHST Statement 3. Teacher Support
  - H0: Selected products will not support teachers to meet identified performance goals for writing instruction at levels of significant difference.
  - HA: Selected products will support teachers to meet identified performance goals for writing instruction at levels of significant difference.

- NHST Statement 4. Alignment of Needs
  - H0: Selected products will not match the needs of students and teachers within the constraints of typical school environments at levels of significant difference.
  - HA: Selected products will match the needs of students and teachers within the constraints of typical school environments at levels of significant difference.

The study will establish meaningful measures of significant difference that are appropriate to the proposed design. For example, the H0 for NHST Statement 1 of writing quality may be rejected if the established level of statistical significance (p < .05) is met in a carefully controlled sample of students whose writing is assessed by a common set of variables. Other appropriate and known standards by which the NHSTs can be rejected will be detailed as the study variables are finalized.
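To make the p < .05 criterion concrete, the following is a minimal sketch of a two-sided permutation test on the difference in mean writing-quality scores between an intervention group and a control group. The study has not yet chosen a test procedure, so the permutation approach, the function name, and the sample scores are all assumptions made for illustration.

```python
import random


def permutation_p_value(intervention, control, n_permutations=10000, seed=0):
    """Two-sided p-value for the difference in group means."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible

    def mean(values):
        return sum(values) / len(values)

    observed = abs(mean(intervention) - mean(control))
    pooled = list(intervention) + list(control)
    n_int = len(intervention)

    extreme = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are exchangeable, so reshuffle them.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_int]) - mean(pooled[n_int:]))
        if diff >= observed:
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical holistic scores (not study data).
intervention_scores = [4.0, 4.5, 5.0, 4.2, 4.8, 4.6, 4.9, 4.4]
control_scores = [2.0, 2.5, 3.0, 2.2, 2.8, 2.6, 2.9, 2.4]

p = permutation_p_value(intervention_scores, control_scores)
print("reject H0 at .05" if p < 0.05 else "fail to reject H0 at .05")
```

The test is two-sided, in keeping with the two-tailed framing of the hypotheses. A real analysis would also need to account for students being clustered within classrooms, which this sketch ignores.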

Expressing the study design according to NHSTs will additionally help to ensure that causation, where appropriate, is established through investigation of co-variation, spurious variable relationships, logical time order, and theory explanation.

Study Variables

The study will focus on both quantitative and qualitative measures of usage and performance. Beginning with quantitative measures, we will determine which standard data can be uniformly extracted from the participating products before finalizing the common variables. Where we intend to apply some common measure that one or more systems are not designed to generate, we will work with those providers to develop protocols that are fair and that would not corrupt any indication of specific use or performance. Second, as we finalize the qualitative measures, we intend to employ surveys, semi-structured interviews and forums for discussion, to ensure that any naturalistic components of the study design are aligned with the specific capabilities of the selected products and the intended goals of the study. Because we are selecting specific products to implement in the study, we will invite those product providers into the final design considerations before publicly releasing specific metrics and protocols for gathering the data.

During the investigation into common variables against which product performance(s) can be measured, a clear distinction emerges between those factors measuring a student's performance as a writer and those measuring a student's attitude towards writing. The study is intended to consider both. However, it is important to note that a unifying validation framework extends beyond any simple assessment. Writing is a complex and human undertaking. Therefore, it is essential that measures of product usage and performance extend beyond mere grading or scoring. The Working Group seeks to understand whether or not selected products can foster a deeper understanding of writing, and that goal is central to our investigation.

Performance Variables

The initial approach to performance variables is found in the Working Group's commitment to the following statement:

"We do not intend to develop a study to demonstrate long-term or longitudinal outcomes for academic achievement."

However, as the investigation into performance variables has revealed, there are many approaches to measuring use of the selected products to provide an accurate assessment of impact on writing ability without requiring overly burdensome methods.

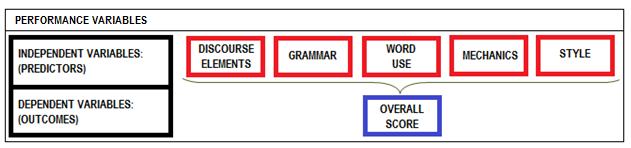

Based on a review of the relevant literature and an assessment of exemplar software systems already demonstrated to the Working Group, a visual representation of the writing construct as it is represented in many applications is presented here. In the model, discourse elements, grammar, word use, mechanics, and style represent the independent (or predictor) variables that contribute to one dependent (or outcome) variable, such as an overall score. The outcome (criterion) variables for vendor products might include the following: an assessment of strength of organization and development, writing productivity, error rates, types of errors, scores from traits, and a holistic (or composite) score. Those variables may be applied both to pre-writing tasks, as well as final writing submissions. Following the selection of the participating products, we will investigate how these (or other) variables may apply (and the data that can be extracted from those selected systems).

Some products can provide measures of over 120 writing outcome variables. As those final variables are chosen, it will be important to determine that outcomes are common across the vendor products and associated with meaningful dimensions of the writing construct. Additionally, some thought might be given to including additional outcome measures, such as grades in the relevant English Language Arts (ELA) course or scores on high-stakes state summative assessments in writing. In other words, any assessment of the products reduced to a "score" should also consider outcomes that extend beyond variables generated by the product.

Finally, the "scoring capabilities" of the products are not deemed more important than other considerations of performance, nor are they the final consideration. Giving students a safe harbor to draft, re-draft, consider, submit, discuss, and re-submit in a recursive manner is an essential aspect of best practices in writing instruction. Our metrics (and the order of our attention to them) will mirror those priorities. Therefore, whether or not measures of the independent variables can be reduced to an outcome, such as a score, that question will be complemented by other considerations.

Attitudinal Variables

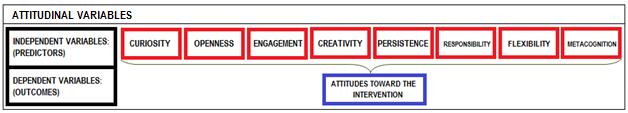

Many important variables affecting writing instruction are understood as reflecting certain habits of mind among students, based on the Big Five Personality factors (see below). Here, the Working Group takes its model from the Framework for Success in Postsecondary Writing. A core document of the writing community, the Framework was developed collaboratively with representatives from the Council of Writing Program Administrators, the National Council of Teachers of English, and the National Writing Project. Defined as "ways of approaching learning that are both intellectual and practical," the habits of mind include the following: curiosity (the desire to know more about the world); openness (the willingness to consider new ways of being and thinking); engagement (a sense of investment and involvement in learning); creativity (the ability to use novel approaches for generating, investigating, and representing ideas); persistence (the ability to sustain interest in and attention to short and long-term projects); responsibility (the ability to take ownership of one's actions and understand the consequences of those actions for oneself and others); flexibility (the ability to adapt to situations, expectations, or demands); and metacognition (the ability to reflect on one's own thinking as well as on the individual and cultural processes and systems used to structure knowledge).

Using such methods as surveys, semi-structured interviews, and system usage data, alone or in combination, the Working Group seeks insight into whether products can support deep engagement among student writers.

Trend Identification & Analysis

The combination of attitudinal and performance variables allows for a rich source of validation evidence. During the process of collecting evidence, we will also produce data that can be used for statistical modeling, to identify or predict trends and patterns that may reveal new findings. Combined with surveys of administrators, teachers, and students, both kinds of variables can allow the research team to gather important sources of information. Such analyses will yield a more robust representation of the study variables.

Intervention and Control Group Identification

The study will include both a set of classrooms acting as the intervention group (i.e., those assigned selected products) and a set acting as the control group (i.e., those not using selected products but using a common practice orientation to instruction and assessment). Because of the variability of product requirements, assignments may be constrained by a particular geographic area or other consideration, depending upon the provider, to ensure that software support and any necessary in-service training can be offered. If support is not an issue, then the assignment of classes to each selected product can be accomplished by random assignment.
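As a rough illustration of random assignment under a geographic constraint, the sketch below shuffles classrooms within each area and splits each area between intervention and control. The function name, the classroom identifiers, and the use of geographic area as the only stratum are assumptions for illustration; the study's actual assignment procedure has not been specified.

```python
import random
from collections import defaultdict


def assign_classrooms(classrooms, seed=0):
    """Randomly split classrooms into groups within each stratum.

    classrooms: list of (classroom_id, stratum) tuples, where the stratum
    is a hypothetical geographic area label.
    """
    rng = random.Random(seed)  # fixed seed so the assignment can be audited

    by_stratum = defaultdict(list)
    for classroom_id, stratum in classrooms:
        by_stratum[stratum].append(classroom_id)

    assignment = {}
    for stratum in sorted(by_stratum):
        ids = sorted(by_stratum[stratum])  # stable order before shuffling
        rng.shuffle(ids)
        half = len(ids) // 2
        # With an odd count, the extra classroom goes to the control group.
        for classroom_id in ids[:half]:
            assignment[classroom_id] = "intervention"
        for classroom_id in ids[half:]:
            assignment[classroom_id] = "control"
    return assignment


# Hypothetical classrooms in two areas.
classrooms = [
    ("north-01", "north"), ("north-02", "north"),
    ("north-03", "north"), ("north-04", "north"),
    ("south-01", "south"), ("south-02", "south"),
    ("south-03", "south"),
]
for classroom_id, group in sorted(assign_classrooms(classrooms).items()):
    print(classroom_id, group)
```

Splitting within each area keeps the two groups geographically balanced while leaving the choice of individual classrooms to chance.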

Under known constraints, every effort will be made to approximate matching groups based on student populations and distributions by geographic and demographic considerations, including attention to such variables as status as English Language Learners (ELL), English as a Second Language (ESL) students, or Special Education Students (SES); student demographics, including but not limited to gender, race, and ethnicity; and a meaningful, language arts-related measure on a national, regional, or school-based assessment.

We will seek to finalize other appropriate considerations, such as grade level(s) where students are both best able to use the technology (e.g., computer literate) and where writing growth is most likely to occur. Students in grades 6-10 are grade-level candidates. We will work with the selected product providers and state partners to determine the final grade-level considerations.

Intervention Intensity

The need to fairly assess the selected products implies an inherent tension whenever the study requires some shift between certain variables related to usage and product performance. In order to give the selected products the best chance of showing an intervention to control contrast, if one exists, the intensity of product use is directly related to the validity of the product performance; as students use the product more intensely, opportunities to attribute shifts in the performance of student writing to product usage increase. However, by increasing the intensity of use we also run the risk of disrupting curricula and classroom processes intended to promote teacher and peer responses to writing. The study seeks to identify where product integration can lead to stronger outcomes within the framework of NHST Statements 1-4, but product integration cannot imply either supplanting the role of the teacher or forcing uniformity of instruction where such standardization may threaten student outcomes.

Teachers and students in the intervention group will be provided an overview of the selected product and then participate in a specific duration of classroom integration (e.g., one grading period) that would include writing against some pre-determined set of uniform prompts or writing tasks that are administered in the vendor product environment, some portion of which would require opportunities for multiple revisions. Ideally, intervention groups would utilize a minimum of three modules that address pre-writing tools integrated into the vendor's product. Students in both the intervention and control groups would write against three uniform prompts that would be administered at the beginning of the intervention, in the middle, and at the conclusion. We may be able to select prompts from the libraries of participating vendors (if the vendors are willing to share data with the other selectees), but more importantly we will select those interventions which present as little disruption to the classroom curricula and the teachers' role as the writing instructor as possible. While the discourse mode of the uniform prompts is to be determined, a common framework will be used to ensure that the variable of discourse mode (exposition, description, narrative, argumentative, or another defined mode) is consistent across assessment occasions.

For those in the intervention group, any remaining use of the selected products can either be generated by the teacher introducing his or her own prompts and the generic norms supplied by the vendor (the assumption here is that the vendor has these available) or the use of prompts that are available from the vendor's library. Because uneven use of other activities in the selected products may also contribute complexity to the study, use of pre-writing activities should also be determined.

There are several advantages to employing prompts over other possible writing assignments. First, their relatively short length means that teachers can incorporate them in a more flexible way than committing to a longer writing project such as a term paper or report. This advantage would reduce curricular impact, though they may be similar to what teachers are already using in preparing for statewide high-stakes assessments. Second, the selected products will likely include a number of calibrated prompts, representing the various discourse modes, from which teachers can choose. Third, if teachers elect not to incorporate the vendor-calibrated prompts, they can create their own and apply generic norms for providing feedback to students. Fourth, norms exist that can be applied to both the control and experimental groups. Finally, prompts represent a relatively uniform activity that would permit teachers to be able to monitor writing growth over time.

As the Working Group continues to investigate the variables related to usage and product performance, here are some of the intensity levels that are under consideration to validate product performance.

Number of common prompts assigned during the study duration: 4-12

Number of benchmark prompts to establish norms of performance: 3 (beginning, middle, end)

Number of required pre-writing tasks during the course of the study: 3-7

These are not recommended levels of intensity; instead, they are ranges that the Working Group will investigate following the selection of the products. Here, it is important to note that even where the Working Group may agree upon ranges of recommended intensity, ultimately the influence of the study will depend upon the preferences of the teachers in the classroom. Therefore, perhaps the most important element of the study will be determined by the degree to which usage is condoned, established or promoted within classroom conditions. The study intends to deliver teacher and student surveys to gauge these variables.

Protection of Human Subjects

The study will adhere to existing rules and requirements within participating schools. In addition, the Working Group will install stop protocols in the event that any intervention is associated with an interruption of classroom instruction that might be detrimental to student achievement. Once the products have been selected, the Working Group will determine those signals which would trigger the stop protocols. They may include both qualitative and quantitative measures of usage or performance. In any case, final determinations for either introducing products into classrooms or prematurely stopping use of any product during the study will be made by each participating school.

Study Outcomes

The Working Group has synthesized a broad range of interests, to ensure that all stakeholders of the study are aligned with what these considerations can (and will not) accomplish. Such preliminary agreement will allow two written reports following the study, identified as the study outcomes, to be presented in a way that minimizes disagreement about study results and recommendations.

The study will produce a written report on the findings and a series of recommendations based on the study outcomes available to all stakeholders. Those stakeholders will include the participating vendors and/or larger marketplace of product providers, whereby the research team may identify areas for improvement among current capabilities; it will also include school districts and school administrators, responsible for determining use of such systems, as well as teachers, students and families, to broaden the understanding of the selected products. By focusing on these audiences, the research team intends to generate further discussion among legislators, education policy experts and other research initiatives, to encourage follow-on studies and investigations into the use of such products. All study outcomes will be web-based and thus publicly available.